Volume 23 article 1300 pages: 647-655

Received: Dec 18, 2024 Accepted: Sep 12, 2025 Available Online: Sep 12, 2025 Published: Dec 14, 2025

DOI: 10.5937/jaes0-55518

A STUDY ON DETECTING THE WELD SPOT FOR BUILDING AN AUTOMATIC WELDING ROBOT

Abstract

Automatic robots have become an important trend in industry for improving productivity and performance, for example through the introduction of welding robots into the welding process. An automatic welding robot helps increase production efficiency and reduces risks and labor costs. This study proposes a method that incorporates the YOLOv8 deep learning network into an automatic welding robot. The YOLOv8 model detects and monitors welds in real time through a camera system, which helps automate the welding process by providing information about the position and shape of the weld points. The welding coordinate values are transmitted to the robot, and the welding tip is adjusted to target specific welding points, creating an efficient and precise automated welding process. The results are useful for the development and application of automatic robots and artificial intelligence (AI) in welding and industrial production. By combining welding technology and artificial intelligence, the welding industry can continue to progress and modernize, contributing to the sustainable development of global manufacturing.

Highlights

- Real-time weld-seam segmentation using YOLOv8.

- Automatic conversion of weld masks to robot coordinates.

- Improved weld-spot accuracy for autonomous welding.

- Enhanced automation with reduced manual intervention.

Keywords


1 Introduction

Automatic robots (AR) have become an indispensable part of production processes for improving performance and productivity, for example through the introduction of robots for industrial applications [1], the use of welding robots in the welding process [2, 3], and the development of UAVs for navigation duties [4]. AR offer flexibility, precision, safety, and efficiency in industrial applications and human services [5]. The automatic welding robot (AWR) is not only a technological advancement but also an effective way to increase productivity and reduce risks and labour costs, as shown by welding robots for underwater environments [6], mobile welding robots for nuclear working conditions [7], and welding robots for unstructured environments [8]. Despite significant progress, challenges remain in the development of welding robot control systems. Welding robots have received considerable attention from researchers, who have developed control methods based on motion control [9], 3D simulation [10], and machine learning [11]. These methods all have advantages but still face limitations when applied in real industrial environments. One of the most important challenges is the accuracy of the welding process [12]. Although modern control systems can be tuned to achieve high precision, maintaining stability and uniformity under all operating conditions remains a problem. The applicability of welding robot control methods in real industrial environments is also an important issue: working conditions are often varied and not always stable, which can affect system performance. Training and deploying these systems also requires significant investment by the business, from employee training to integration into existing production processes. Robot control has brought many benefits to the manufacturing industry, but continued research and development are needed to overcome these challenges and optimize the performance of actual production processes.

One of the most important tasks of an automated welding robot is to accurately identify weld points on work surfaces. Accuracy in determining the welding point not only affects the quality of the final product but also ensures the safety and performance of the welding process. Many object-detection algorithms based on deep learning have been proposed. Initially, the YOLO algorithm achieved impressive speed but limited accuracy, especially when detecting small objects and localizing overlapping objects. To overcome these limitations, subsequent versions of YOLO were developed, each aimed at improving detection accuracy and efficiency. YOLOv2 introduced innovations such as anchor boxes and multi-scale training, enhancing its ability to accurately localize and handle objects of different sizes [13]. YOLOv3 improved object detection by using a feature pyramid network (FPN) and multiple detection scales, providing better detection at different resolutions [14, 15]. These improvements boosted YOLO's performance in many scenarios. YOLOv4 brought more advanced architectural modifications [16], such as the CSPDarknet-53 backbone [17] and PANet [18], improving real-time object detection accuracy. YOLOv5 focused on optimizing the architecture to speed up inference while maintaining accuracy [19]. YOLOv6 and YOLOv7 continued to improve by exploring new approaches [20, 21]. YOLOv8 has been introduced to industrial applications with effective innovations [22]. It leverages the strengths of previous versions and explores new approaches to enhance real-time object detection.

In this work, YOLOv8 is not only used as an object detector; it is also the core of the perception module. By using a segmentation variant of YOLOv8, the model generates pixel-level weld seam masks, allowing us to formulate weld point extraction as a geometric inference problem rather than relying solely on bounding box localization. The choice of this approach is based on the assumption that weld seams exhibit visually distinguishable textures and shapes, which can be learned through convolutional feature representations.

This study advocates the application of the YOLOv8 model, recognized as an advanced solution in the field of object detection, to the important task of automatically identifying weld points during the welding process. This effort sits at the intersection of welding technology and artificial intelligence, offering a promising path to improve on conventional approaches to welding and to increase automation in the manufacturing sector. By exploiting the advanced object detection techniques contained in the YOLOv8 model, the work aims to overcome the limitations of traditional manual methods and conventional automatic systems in weld spot recognition.

Furthermore, the proposed methodology relies on a calibrated camera-to-robot transformation model. We assume that once the weld seam is segmented, geometric features extracted from the mask, such as its centerline or boundary pixels, can be mapped into the robot coordinate system through homogeneous transformations. This assumption allows the segmented weld points to be used directly for trajectory generation.
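The pixel-to-robot mapping assumed above can be sketched as follows. This is a minimal illustration, not the calibration used in the study: it assumes a planar workpiece viewed by a fixed camera, so that a single 3x3 homogeneous matrix (scale, rotation, translation) relates pixel coordinates to robot coordinates. The function names and all numeric calibration values (`scale_mm_per_px`, `theta_rad`, `tx_mm`, `ty_mm`) are hypothetical.

```python
import math
from typing import List, Tuple

Matrix3 = List[List[float]]


def make_planar_transform(scale_mm_per_px: float, theta_rad: float,
                          tx_mm: float, ty_mm: float) -> Matrix3:
    """Build a 3x3 homogeneous matrix: uniform scale, rotation, translation.

    All four parameters are hypothetical calibration values; a real system
    would estimate them from a camera-to-robot calibration procedure.
    """
    c, s = math.cos(theta_rad), math.sin(theta_rad)
    return [
        [scale_mm_per_px * c, -scale_mm_per_px * s, tx_mm],
        [scale_mm_per_px * s, scale_mm_per_px * c, ty_mm],
        [0.0, 0.0, 1.0],
    ]


def pixel_to_robot(T: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map a pixel (u, v) to robot coordinates via [x, y, w]^T = T [u, v, 1]^T."""
    x = T[0][0] * u + T[0][1] * v + T[0][2]
    y = T[1][0] * u + T[1][1] * v + T[1][2]
    w = T[2][0] * u + T[2][1] * v + T[2][2]
    return (x / w, y / w)


# Assumed calibration: 0.5 mm per pixel, no rotation, origin offset (100, -50) mm.
T = make_planar_transform(0.5, 0.0, 100.0, -50.0)
print(pixel_to_robot(T, 162, 129))  # (181.0, 14.5)
```

Keeping the mapping as a single matrix means that a different camera pose only changes the calibration values, not the code that converts segmented weld points into robot targets.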

Integrating advanced machine learning algorithms with welding technology not only improves the accuracy and reliability of weld spot detection but also paves the way for higher efficiency and productivity in industrial environments. The importance of this research lies in its potential to catalyse change in the manufacturing landscape. By leveraging artificial intelligence, the paper strives to streamline and optimize welding processes, thereby reducing inefficiencies, minimizing errors, and improving overall quality in production operations. Through the combination of welding technology and advanced machine learning methods, we aim to advance industrial welding and manufacturing towards greater automation, precision, and performance. Finally, the research seeks to make tangible contributions to improving efficiency and quality in industrial welding and manufacturing processes. By exploiting the synergy between welding technology and artificial intelligence, the work strives to provide manufacturers with tools and approaches that drive operational excellence, support sustainable growth, and strengthen the competitiveness of the industry.

2 Materials and methods

2.1 The structure of an automatic welding robot

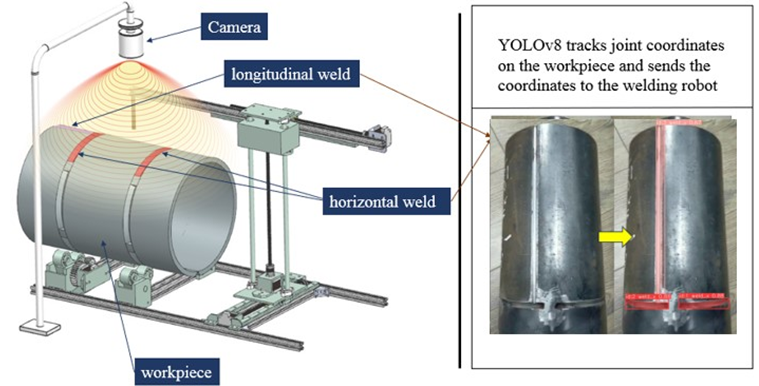

Figure 1 illustrates the basic structure of an automatic welding robot. During the welding process, an important step is detecting the weld so that the robot can weld accurately and effectively. To do this, the robot is equipped with a camera system integrated with an advanced image recognition algorithm, YOLOv8. This camera system is responsible for identifying welds on pipe surfaces. When a weld is detected, the camera system sends its coordinates to the welding robot to continue the work. Two main types of welds have been identified for the automated welding robot to handle: vertical welds and horizontal welds. This helps optimize the welding process for both cases. Once the type of weld has been identified, the robot arm automatically moves to the position of the weld and starts the welding process. Submerged arc welding is one of the preferred welding methods, especially in industrial applications, because it offers high performance and uniform welding quality [23]. In particular, this method also prevents the camera from being affected by disturbances such as welding fumes or arc light while monitoring the weld. The automated welding robot system is not only a combination of technology and automation but also a prime example of innovation in the modern welding industry.

Fig. 1. A basic structure of an automatic welding robot

2.2 YOLOv8

You Only Look Once (YOLO) networks are structured around three main components: the backbone, the neck, and the detection head [24, 25]. Each component plays a crucial role in achieving accurate and efficient object detection. YOLOv1 is known for its groundbreaking real-time object detection capability; it used a single convolutional network, with a custom backbone of 24 convolutional layers, to predict multiple bounding boxes and class probabilities simultaneously. YOLOv2, also known as YOLO9000, adopted the Darknet-19 backbone and improved both accuracy and speed by incorporating anchor boxes for better localization and multi-scale training for objects of different sizes. YOLOv3 further improved accuracy by using the Darknet-53 backbone and a feature-pyramid-style structure for multi-scale feature extraction, resulting in better detection of small objects and improved bounding box regression. YOLOv4 was a significant advancement over its predecessors, featuring the CSPDarknet53 backbone and incorporating techniques such as the Mish activation function and PANet for improved performance [26]. YOLOv5 took a different approach, focusing on simplicity and efficiency with lightweight backbone architectures and simplified feature extraction methods. YOLOv6 explored more hardware-efficient, re-parameterizable backbone designs [27], and YOLOv7 introduced extended efficient layer aggregation (E-ELAN) blocks together with refined training strategies. YOLOv8 builds on this line with a CSP-style convolutional backbone that uses C2f modules, an anchor-free detection scheme, and a decoupled head [28]; the same architecture also provides a segmentation variant. Task-specific extensions, such as attention modules for handling occlusions or fine-grained object recognition, can be added on top of this architecture. The overall structure of YOLOv8 retains the CSP design idea of its predecessors. The backbone, the first part of the YOLOv8 network, extracts features from input images using deep convolutional layers. The neck, connecting the backbone and head, combines information from feature maps of different resolutions to enhance object recognition ability. Finally, the head predicts the location and class of objects present in the image using convolutional layers, followed by confidence thresholding and non-maximum suppression to select and mark objects on the output image. Through continuous iterations, the YOLO series aims to enhance speed, accuracy, and efficiency in object detection tasks, introducing innovations in architecture and feature extraction techniques [29, 30].

2.3 Dataset

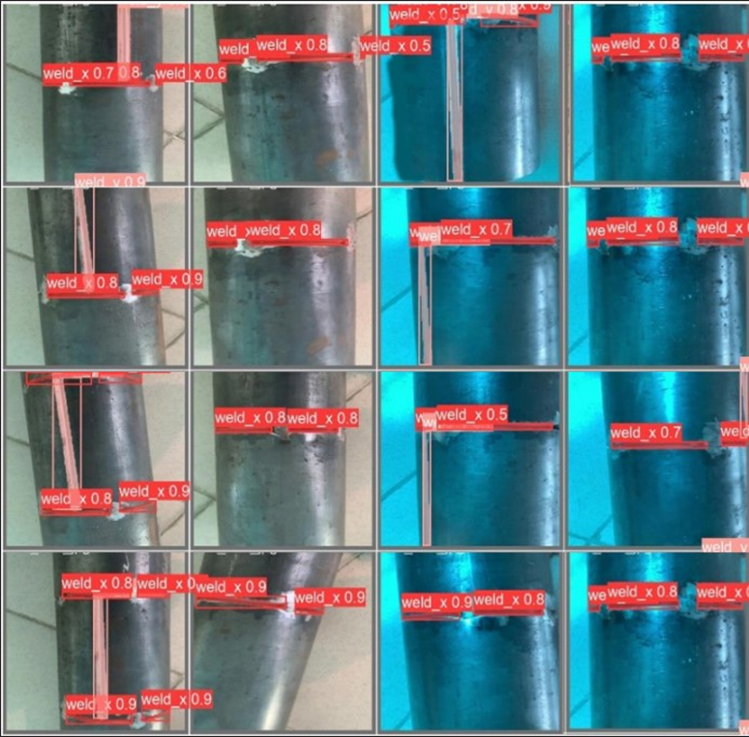

Our dataset comprises 980 images captured by the camera and represents a variety of welding scenarios encountered in real-world environments. As Figure 2 shows, the images cover a wide range of lighting conditions and environmental contexts. Within these images, weld spots appear at a range of size ratios, reflecting the variability of welding processes across different contexts and applications. To support precise object detection and classification, we annotated each image with labeling software to identify and categorize the weld spots. Horizontal weld lines are labeled "Weld_X", while vertical weld lines are labeled "Weld_Y", ensuring unambiguous identification of these features within the dataset. After annotation, we partitioned the labeled images into training and test sets at a ratio of 2:1. This division ensures that the models are trained on a diverse range of data while leaving unseen samples for assessing their performance.

Fig. 2. Some samples in the dataset
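The 2:1 partition described in this section can be sketched as below. This is only an illustration under stated assumptions: the file names, the fixed random seed, and the `split_dataset` helper are hypothetical, and the source does not say whether the split was random or stratified by weld class.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], train_parts: int = 2,
                  test_parts: int = 1, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle the items reproducibly and split them train:test by the given ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_parts / (train_parts + test_parts))
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the 980 annotated images.
image_names = [f"weld_{i:04d}.jpg" for i in range(980)]
train_set, test_set = split_dataset(image_names)
print(len(train_set), len(test_set))  # 653 327
```

With 980 images, a 2:1 ratio gives 653 training images and 327 test images when the training count is rounded to the nearest integer.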

3 Results and discussions

To evaluate weld joint detection, we used the testing environment shown in Table 1. The hardware and software components of this setup provide a suitable environment for evaluating weld joint detection with the YOLOv8 model.

Table 1. Experimental environment

| Parameters | Experimental environment |
| --- | --- |
| CPU | Intel i7-11700 @2.50GHz |
| GPU | GeForce GTX 1660 SUPER, 6144MiB |
| RAM | 32 GB |
| OS | Windows 10 Pro |
| Python | 3.9.16 |
| Framework | Ultralytics YOLOv8.0.111, torch-2.0.1+cu118 |

Figure 3 shows the results of identifying horizontal and vertical welds. The test set included images collected under different environmental conditions, making it diverse. For the horizontal weld point "Weld_X", the accuracy ranges from 80% to 90%. This shows that the YOLOv8 model can accurately and reliably identify and classify horizontal welding points across a variety of environmental conditions. For the vertical weld point "Weld_Y", the accuracy ranges from 70% to 80%, which is lower than that for the horizontal weld point. Although this accuracy is lower, it still shows that the model can identify and classify vertical weld points at an acceptable level. Overall, the experimental results on the test set demonstrate the performance of the YOLOv8 model in identifying weld points under different environmental conditions. This is an important step forward in applying artificial intelligence to the welding industry, helping to optimize production processes and enhance product quality.

Fig. 3. Results of identifying horizontal and vertical welds

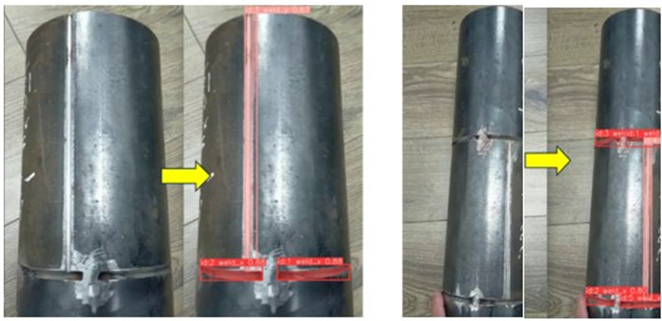

Following these positive results, we validated the model's performance in real time. Figure 4 shows that for horizontal welds, the model achieved an accuracy of up to 88%. This highlights the model's ability to accurately and reliably identify and classify horizontal weld points, even in real-time operation. For vertical welds, the results are stable but lower, fluctuating between 60% and 70%. This suggests that, in some cases, the model has more difficulty recognizing vertical weld points in real time. Although this accuracy is lower, it still shows that the model can provide useful information and decision support in real-life situations.

Fig. 4. Results of identifying horizontal and vertical welds in real-time

Fig. 5. The coordinates of the welds are continuously updated

Table 2. The welding point coordinates for the welding robot

| Weld_Y | Weld_X_1 | Weld_X_2 |
| --- | --- | --- |
| X: 8, Y: 414; X: 6, Y: 414; X: 6, Y: 415; X: 5, Y: 417; X: 5, Y: 421; X: 6, Y: 423; X: 31, Y: 423; X: 32, Y: 424; X: 62, Y: 424; X: 63, Y: 423; X: 101, Y: 423; X: 102, Y: 421; X: 113, Y: 421; X: 114, Y: 420; X: 121, Y: 420; X: 122, Y: 429; X: 155, Y: 419; X: 156, Y: 417; X: 162, Y: 417 | X: 162, Y: 129; X: 162, Y: 172; X: 161, Y: 173; X: 161, Y: 177; X: 162, Y: 178; X: 161, Y: 180; X: 161, Y: 228; X: 162, Y: 229; X: 162, Y: 241; X: 164, Y: 242; X: 164, Y: 247; X: 165, Y: 249; X: 165, Y: 263; X: 166, Y: 264; X: 166, Y: 300; X: 168, Y: 301; X: 168, Y: 313; X: 169, Y: 314; X: 169, Y: 318; X: 170, Y: 319; X: 170, Y: 326; X: 172, Y: 327; X: 172, Y: 336; X: 173, Y: 337; X: 173, Y: 344; X: 174, Y: 345; X: 174, Y: 352; X: 175, Y: 353; X: 175, Y: 399; X: 177, Y: 400; X: 177, Y: 408; X: 178, Y: 409; X: 178, Y: 412; X: 179, Y: 413; X: 179, Y: 416; X: 181, Y: 417; X: 181, Y: 425; X: 182, Y: 426; X: 182, Y: 435; X: 183, Y: 437; X: 183, Y: 450; X: 182, Y: 451; X: 182, Y: 455; X: 181, Y: 456; X: 181, Y: 459; X: 179, Y: 460; X: 179, Y: 463 | X: 187, Y: 518; X: 188, Y: 525; X: 191, Y: 530; X: 192, Y: 545; X: 195, Y: 550; X: 198, Y: 566; X: 199, Y: 571; X: 200, Y: 583; X: 203, Y: 593; X: 204, Y: 603 |

The coordinate output algorithm for the welding robot operates along the x and y axes, thereby controlling the robot to move precisely to the desired position on the Oxy coordinate system. As illustrated in Figure 4, the YOLOv8 algorithm is employed to detect weld seams within the camera's frame. In this specific instance, the system successfully identified a total of three welds, including two horizontal welds (Weld_X) and one vertical weld (Weld_Y). Once detected, the coordinates of the welds are continuously updated along the x and y axes within the camera's working space. This ensures that the system can accurately track and pinpoint the location of each weld, regardless of any changes that may occur during the welding process.

To obtain accurate welding coordinates for the robot, the system uses the pixel-based segmentation output directly from the YOLOv8 model. As illustrated in Figure 4, each weld seam is segmented into a continuous vertical or horizontal region, and every pixel belonging to that region is extracted. These values are then listed in Table 2, where each entry represents a pair of (X, Y) pixel coordinates captured from the camera frame. The parameters in Table 2 are not manually chosen but are automatically generated based on the following tuned settings of the detection pipeline:

- Confidence threshold: set to 0.7, ensuring that only high-confidence weld detections are processed for coordinate extraction.

- IoU threshold: set to 0.5 to balance sensitivity and precision when separating adjacent weld seams.

- Segmentation mask resolution: retained at the YOLOv8 default scale to preserve boundary details of the weld region, which directly affects the accuracy of pixel coordinate extraction.

- Coordinate sampling strategy: for each segmented weld seam, all foreground pixels are collected instead of selecting only edges or centerlines. This allows the robot controller to compute a more stable and noise-free weld trajectory.
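The effect of the confidence and IoU thresholds listed above can be illustrated with a simplified, pure-Python sketch. It is not the Ultralytics implementation: the `Detection` class, the greedy per-class suppression loop, and the example boxes and scores are hypothetical and serve only to show how the two thresholds (0.7 and 0.5) act on candidate detections.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    label: str
    score: float
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: List[Detection], conf_thres: float = 0.7,
                      iou_thres: float = 0.5) -> List[Detection]:
    """Drop low-confidence detections, then greedily suppress same-class overlaps."""
    confident = sorted((d for d in dets if d.score >= conf_thres),
                       key=lambda d: d.score, reverse=True)
    kept: List[Detection] = []
    for det in confident:
        # Keep a detection only if it does not overlap a higher-scoring one of its class.
        if all(k.label != det.label or iou(det.box, k.box) <= iou_thres for k in kept):
            kept.append(det)
    return kept


# Hypothetical candidates: a near-duplicate Weld_X box and a low-confidence Weld_Y box.
candidates = [
    Detection("Weld_X", 0.88, (160, 120, 185, 470)),
    Detection("Weld_X", 0.75, (162, 125, 186, 465)),
    Detection("Weld_Y", 0.65, (0, 410, 165, 430)),
    Detection("Weld_X", 0.80, (185, 510, 206, 610)),
]
for det in filter_detections(candidates):
    print(det.label, det.score)
```

In this example the second box is suppressed because it overlaps the first with an IoU above 0.5, and the third is discarded because its score is below 0.7, so two Weld_X detections remain.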

These tuned parameters ensure that the pixel coordinates accurately represent the real weld geometry. As a result, the robot receives a dense and consistent set of spatial points for each weld (Weld_Y, Weld_X_1, and Weld_X_2), enabling precise motion planning along the Oxy coordinate system.
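One way a controller could turn the dense set of foreground pixels into a smooth path is to average the pixels across the seam width. The sketch below is an assumption for illustration, not the method reported here: the helper names and the toy mask are hypothetical, and the mask is represented as a plain list of rows rather than a model output tensor.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Mask = List[List[int]]  # mask[y][x] is 1 for weld pixels, 0 otherwise


def foreground_pixels(mask: Mask) -> List[Tuple[int, int]]:
    """Collect every (x, y) pixel that belongs to the segmented weld region."""
    return [(x, y) for y, row in enumerate(mask)
            for x, value in enumerate(row) if value]


def centerline(pixels: List[Tuple[int, int]],
               vertical: bool = True) -> List[Tuple[float, float]]:
    """Average pixels across the seam width to obtain one point per row or column.

    For a vertical seam the x values of each image row are averaged;
    for a horizontal seam the y values of each image column are averaged.
    """
    groups: Dict[int, List[int]] = defaultdict(list)
    for x, y in pixels:
        if vertical:
            groups[y].append(x)
        else:
            groups[x].append(y)
    points: List[Tuple[float, float]] = []
    for key in sorted(groups):
        mean = sum(groups[key]) / len(groups[key])
        points.append((mean, float(key)) if vertical else (float(key), mean))
    return points


# Toy 4x5 mask of a vertical seam that drifts one pixel to the right.
toy_mask = [
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
print(centerline(foreground_pixels(toy_mask)))
# [(2.0, 0.0), (2.0, 1.0), (3.0, 2.0), (3.0, 3.0)]
```

Averaging over the full seam width uses every foreground pixel, which is one reason a dense pixel set can give a less noisy path than boundary points alone.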

The robot utilizes this data to guide its welding arm to the precise location of each weld on the workpiece. This process not only minimizes errors and enhances precision in the welding operation but also increases the robot's operational efficiency. With the capability to detect and process coordinates continuously and automatically, the welding system can function stably and effectively, ensuring the highest quality welds.

To further validate the effectiveness of the proposed method, comparative experiments were conducted with other detection algorithms, including YOLOv5 and Faster R-CNN. The results show that YOLOv8 achieved higher accuracy in weld seam detection while maintaining a faster inference speed. These improvements allowed the system to generate more stable and precise pixel-based segmentation results, which directly enhanced the reliability of coordinate extraction for the robot. This comparative evaluation indicates that YOLOv8 offers a practical and high-performance solution for real-time robotic welding applications.

In summary, the integration of the YOLOv8 algorithm with the coordinate control system of the welding robot has created an intelligent and efficient automated welding process that meets the stringent requirements of modern industry. Figure 4 and Table 2 demonstrate the feasibility and effectiveness of this system, optimizing the production process and improving product quality.

4 Conclusions

This article investigated and tested the application of the YOLOv8 model to identifying welding points in the welding process. The results show that the YOLOv8 model can accurately and reliably identify and classify horizontal welding spots while providing stable results in real time. Although the accuracy in identifying vertical weld points is lower, the model still provides useful information in real-life situations. This research marks an important step forward in the application of artificial intelligence to the welding industry and provides a solid basis for optimizing production processes and enhancing product quality. It encourages the development of smart welding technology and the application of artificial intelligence in the manufacturing industry, thereby contributing to economic and social development.

Acknowledgements

The authors thank Hanoi University of Industry, 298 Caudien Street, Hanoi 100000, Hanoi, Vietnam.

References

- N.-T. Tran, V.-L. Trinh, and C.-K. Chung. (2024). An Integrated Approach of Fuzzy AHP-TOPSIS for Multi-Criteria Decision-Making in Industrial Robot Selection. Processes, 12 (8).

- J. T. Kahnamouei and M. Moallem. (2024). Advancements in control systems and integration of artificial intelligence in welding robots: A review. Ocean Engineering, 312, 119294. https://doi.org/10.1016/j.oceaneng.2024.119294.

- P. Chi, Z. Wang, H. Liao, T. Li, X. Wu, and Q. Zhang. (2025). Towards new-generation of intelligent welding manufacturing: A systematic review on 3D vision measurement and path planning of humanoid welding robots. Measurement, 242, 116065. https://doi.org/10.1016/j.measurement.2024.116065.

- T. Van-Long and T. Ngoc-Tien. (2025). The Effective Algorithm for Navigation of Quadrotor UAV in Indoor Environment. Strojnícky časopis - Journal of Mechanical Engineering, 75 (2), 111-122. 10.2478/scjme-2025-0028.

- J. Bhattacharjee and S. Roy, "Welding Automation and Robotics," in Advanced Welding Technologies, ed, 2025, pp. 47-71.

- P. Chi, Z. Wang, H. Liao, T. Li, X. Wu, and Q. Zhang. (2024). Application of artificial intelligence in the new generation of underwater humanoid welding robots: a review. Artificial Intelligence Review, 57 (11), 306. 10.1007/s10462-024-10940-x.

- S. Yao, L. Xue, J. Huang, R. Zhang, P. Zhong, and L. Guo. (2025). Mobile welding robots under special working conditions: a review. The International Journal of Advanced Manufacturing Technology, 138 (9), 3907-3923. 10.1007/s00170-025-15813-3.

- Y. He, Z. Huang, H. Liu, J. Ye, Y. Lu, and X. Zhao. (2025). Self-adaptive seam detection framework for unmanned structural steel welding robots in unstructured environments. Automation in Construction, 175, 106221. https://doi.org/10.1016/j.autcon.2025.106221.

- Y. Zhu, X. He, Q. Liu, and W. Guo. (2022). Semiclosed-loop motion control with robust weld bead tracking for a spiral seam weld beads grinding robot. Robotics and Computer-Integrated Manufacturing, 73, 102254. https://doi.org/10.1016/j.rcim.2021.102254.

- B. Zhou, Y. Liu, Y. Xiao, R. Zhou, Y. Gan, and F. Fang. (2021). Intelligent Guidance Programming of Welding Robot for 3D Curved Welding Seam. IEEE Access, 9, 42345-42357. 10.1109/ACCESS.2021.3065956.

- J. Liu, T. Jiao, S. Li, Z. Wu, and Y. F. Chen. (2022). Automatic seam detection of welding robots using deep learning. Automation in Construction, 143, 104582. https://doi.org/10.1016/j.autcon.2022.104582.

- A. Mohammed and M. Hussain. (2025). Advances and Challenges in Deep Learning for Automated Welding Defect Detection: A Technical Survey. IEEE Access, 13, 94553-94569. 10.1109/ACCESS.2025.3574083.

- W. Liu, L. Ma, J. Wang, and H. Chen. (2019). Detection of Multiclass Objects in Optical Remote Sensing Images. IEEE Geoscience and Remote Sensing Letters, 16 (5), 791-795. 10.1109/LGRS.2018.2882778.

- Z. Hong, T. Yang, X. Tong, Y. Zhang, S. Jiang, R. Zhou, et al. (2021). Multi-Scale Ship Detection From SAR and Optical Imagery Via A More Accurate YOLOv3. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 14, 6083-6101. 10.1109/JSTARS.2021.3087555.

- Z. Yang, Z. Xu, and Y. Wang. (2022). Bidirection-Fusion-YOLOv3: An Improved Method for Insulator Defect Detection Using UAV Image. IEEE Transactions on Instrumentation and Measurement, 71, 1-8. 10.1109/TIM.2022.3201499.

- C. K. B, R. Punitha, and Mohana, "YOLOv3 and YOLOv4: Multiple Object Detection for Surveillance Applications," in 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), 2020, pp. 1316-1321.

- N. Anwar, Z. Shen, Q. Wei, G. Xiong, P. Ye, Z. Li, et al., "YOLOv4 Based Deep Learning Algorithm for Defects Detection and Classification of Rail Surfaces," in 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), 2021, pp. 1616-1620.

- W. Yijing, Y. Yi, W. Xue-fen, C. Jian, and L. Xinyun, "Fig Fruit Recognition Method Based on YOLO v4 Deep Learning," in 2021 18th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), 2021, pp. 303-306.

- N. D. T. Yung, W. K. Wong, F. H. Juwono, and Z. A. Sim, "Safety Helmet Detection Using Deep Learning: Implementation and Comparative Study Using YOLOv5, YOLOv6, and YOLOv7," in 2022 International Conference on Green Energy, Computing and Sustainable Technology (GECOST), 2022, pp. 164-170.

- H. Jabnouni, I. Arfaoui, M. A. Cherni, M. Bouchouicha, and M. Sayadi, "YOLOv6 for Fire Images detection," in 2023 International Conference on Cyberworlds (CW), 2023, pp. 500-501.

- C. Dewi, A. P. S. Chen, and H. J. Christanto, "YOLOv7 for Face Mask Identification Based on Deep Learning," in 2023 15th International Conference on Computer and Automation Engineering (ICCAE), 2023, pp. 193-197.

- A. Afdhal, K. Saddami, S. Sugiarto, Z. Fuadi, and N. Nasaruddin, "Real-Time Object Detection Performance of YOLOv8 Models for Self-Driving Cars in a Mixed Traffic Environment," in 2023 2nd International Conference on Computer System, Information Technology, and Electrical Engineering (COSITE), 2023, pp. 260-265.

- D. Rivas, R. Quiza, M. Rivas, and R. E. Haber. (2020). Towards Sustainability of Manufacturing Processes by Multiobjective Optimization: A Case Study on a Submerged Arc Welding Process. IEEE Access, 8, 212904-212916. 10.1109/ACCESS.2020.3040196.

- J. Terven, D.-M. Córdova-Esparza, and J.-A. Romero-González. (2023). A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Machine Learning and Knowledge Extraction, 5 (4), 1680-1716.

- M. Hussain. (2023). YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines, 11 (7), 677.

- Y. Liu, Y. Liu, X. Guo, X. Ling, and Q. Geng. (2025). Metal surface defect detection using SLF-YOLO enhanced YOLOv8 model. Scientific Reports, 15 (1), 11105. 10.1038/s41598-025-94936-9.

- R. Kaur, U. Mittal, A. Wadhawan, A. Almogren, J. Singla, S. Bharany, et al. (2025). YOLO-LeafNet: a robust deep learning framework for multispecies plant disease detection with data augmentation. Scientific Reports, 15 (1), 28513. 10.1038/s41598-025-14021-z.

- M. Hussain. (2024). YOLOv1 to v8: Unveiling Each Variant–A Comprehensive Review of YOLO. IEEE Access, 12, 42816-42833. 10.1109/ACCESS.2024.3378568.

- J. Zhu, D. Zhou, R. Lu, X. Liu, and D. Wan. (2025). C2DEM-YOLO: improved YOLOv8 for defect detection of photovoltaic cell modules in electroluminescence image. Nondestructive Testing and Evaluation, 40 (1), 309-331. 10.1080/10589759.2024.2319263.

- C. Chao, X. Mu, Z. Guo, Y. Sun, X. Tian, and F. Yong. (2025). IAMF-YOLO: Metal Surface Defect Detection Based on Improved YOLOv8. IEEE Transactions on Instrumentation and Measurement, 74, 1-17. 10.1109/TIM.2025.3548198.

Conflict of Interest Statement

The author declares no conflicts of interest.

Author Contributions

Data Availability Statement

Data are contained within the article.